Issue #53: Embracing cybersecurity in AI

Google's Project Zero team announces Project Naptime

Welcome to Issue #53 of One Minute AI, your daily AI news companion. This issue discusses a recent announcement from the Project Zero team at Google.

Introducing Project Naptime

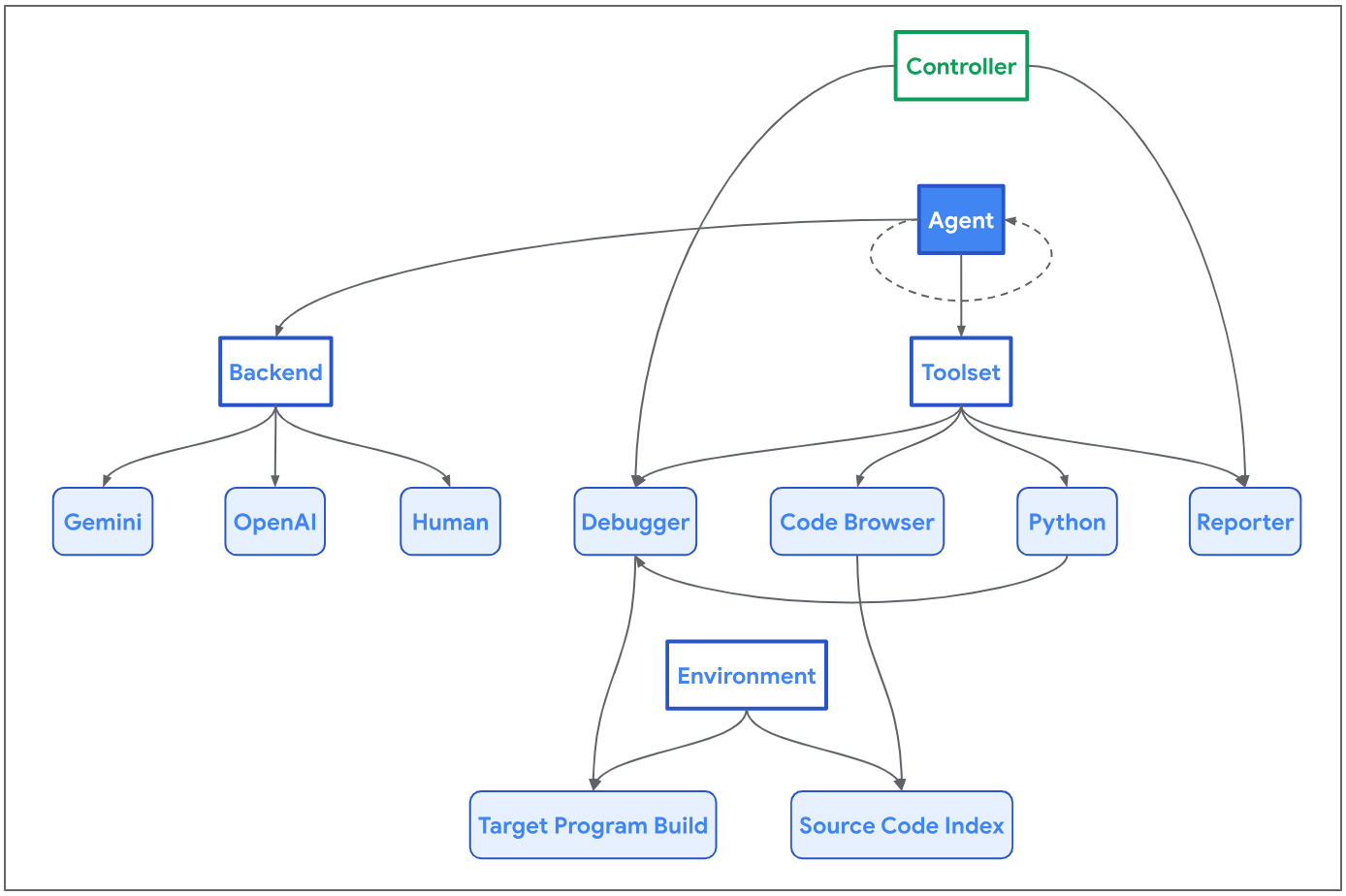

The Project Zero team at Google recently shared details of Project Naptime, which explores using large language models (LLMs) to identify security vulnerabilities. By refining testing methodologies to match the capabilities of modern LLMs, the team improved their performance at discovering security flaws, particularly memory-safety bugs. They propose five principles for making LLMs more effective at this work: giving the model room for extensive reasoning, an interactive environment, specialized tools, perfect verification of results, and a deliberate sampling strategy. The resulting framework, whose architecture mimics the workflow of a human security researcher, reportedly improved benchmark scores by up to 20-fold, and the post walks through detailed examples of how it identifies and analyzes vulnerabilities.

The blog also evaluates this approach on CyberSecEval 2, a benchmark suite from Meta that assesses LLMs' security capabilities. While current LLMs struggle with complex vulnerability research tasks, Project Zero's modified approach shows promise in improving their performance. The results highlight the importance of realistic settings and robust tools when evaluating how well LLMs handle real-world codebases. Continued development of such tools and environments will be crucial to harnessing the full potential of LLMs in cybersecurity research.

Want to help?

If you liked this issue, help spread the word and share One Minute AI with your peers and community.

You can also share feedback with us, as well as news from the AI world that you’d like to see featured, by joining our chat on Substack.