Issue #131: Robot dogs can now navigate the world with AI

Introducing LucidSim

Welcome to Issue #131 of One Minute AI, your daily AI news companion. This issue discusses recent research from MIT.

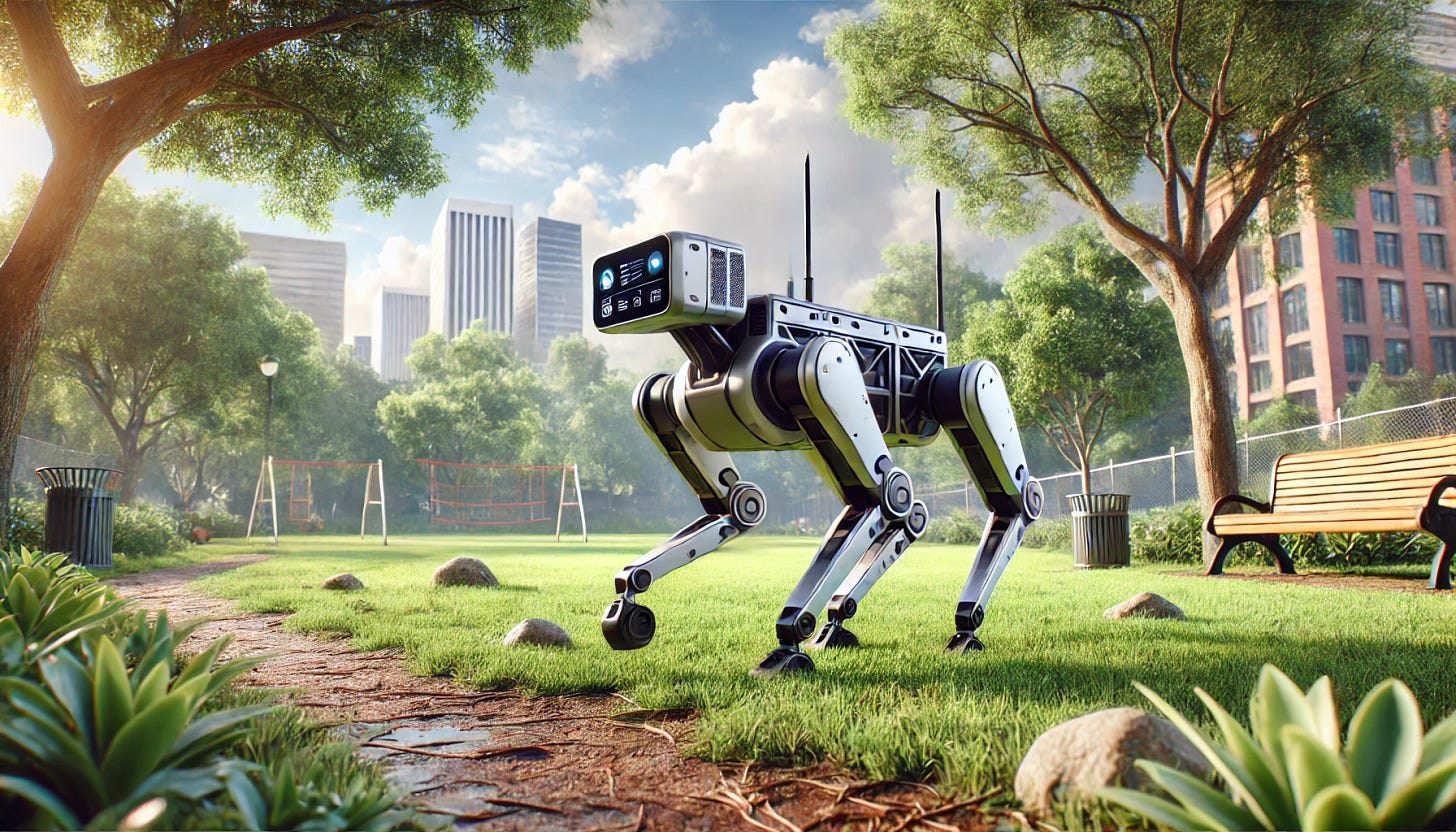

MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Institute for AI and Fundamental Interactions (IAIFI) have introduced LucidSim, a framework that trains quadruped robots, such as robot dogs, to perform parkour and navigate obstacles using only a standard RGB camera. Unlike traditional approaches that rely on depth sensors or real-world data, LucidSim uses generative AI models to create realistic image sequences from the robot’s point of view. This lets robots learn dynamic, complex skills entirely in simulation and then apply them in the real world without further tuning.

LucidSim was showcased at the 2024 Conference on Robot Learning (CoRL), where researchers demonstrated a robot dog, trained entirely in simulation, executing parkour maneuvers in a variety of real-world environments. By combining generative models with physics simulators, LucidSim could make robot training more versatile and cost-efficient, and it points to a promising direction for future research and applications.

Interested developers and researchers can access the project on GitHub or view live demonstrations on the project’s website.

Want to help?

If you liked this issue, help spread the word and share One Minute AI with your peers and community.

You can also join our chat on Substack to share feedback or suggest news from the AI world that you’d like to see featured.